pip3 install -U agno openai duckduckgo-search newspaper4k lxml_html_clean ddgs

Use Agno with AI Proxy in AI Gateway

Configure the AI Proxy plugin on an AI Gateway Route to forward OpenAI-compatible requests to OpenAI, and set Agno’s base_url to that Route. This lets you use Agno’s research agents with Kong plugins such as logging, rate limiting, prompt decoration, and access control.

Prerequisites

Kong Konnect

This is a Konnect tutorial and requires a Konnect personal access token.

- Create a new personal access token by opening the Konnect PAT page and selecting Generate Token.

- Export your token to an environment variable:

export KONNECT_TOKEN='YOUR_KONNECT_PAT'

- Run the quickstart script to automatically provision a Control Plane and Data Plane, and configure your environment:

curl -Ls https://get.konghq.com/quickstart | bash -s -- -k $KONNECT_TOKEN --deck-output

This sets up a Konnect Control Plane named quickstart, provisions a local Data Plane, and prints out the following environment variable exports:

export DECK_KONNECT_TOKEN=$KONNECT_TOKEN
export DECK_KONNECT_CONTROL_PLANE_NAME=quickstart
export KONNECT_CONTROL_PLANE_URL=https://us.api.konghq.com
export KONNECT_PROXY_URL='http://localhost:8000'

Copy and paste these into your terminal to configure your session.

Kong Gateway running

This tutorial requires Kong Gateway Enterprise. If you don’t have Kong Gateway set up yet, you can use the quickstart script with an enterprise license to get an instance of Kong Gateway running almost instantly.

- Export your license to an environment variable:

export KONG_LICENSE_DATA='LICENSE-CONTENTS-GO-HERE'

- Run the quickstart script:

curl -Ls https://get.konghq.com/quickstart | bash -s -- -e KONG_LICENSE_DATA

Once Kong Gateway is ready, you will see the following message:

Kong Gateway Ready

decK v1.43+

decK is a CLI tool for managing Kong Gateway declaratively with state files. To complete this tutorial, install decK version 1.43 or later.

This guide uses deck gateway apply, which directly applies entity configuration to your Gateway instance.

We recommend upgrading your decK installation to take advantage of this tool.

You can check your current decK version with deck version.

Required entities

For this tutorial, you’ll need Kong Gateway entities, like Gateway Services and Routes, pre-configured. These entities are essential for Kong Gateway to function but installing them isn’t the focus of this guide. Follow these steps to pre-configure them:

- Run the following command:

echo '
_format_version: "3.0"
services:
  - name: example-service
    url: http://httpbin.konghq.com/anything
routes:
  - name: example-route
    paths:
    - "/anything"
    service:
      name: example-service
' | deck gateway apply -

To learn more about entities, you can read our entities documentation.

OpenAI

This tutorial uses OpenAI:

- Create an OpenAI account.

- Get an API key.

- Create a decK variable with the API key:

export DECK_OPENAI_API_KEY='YOUR OPENAI API KEY'
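Before moving on, it can help to confirm that the exports above are visible to the shell session you will run Python from. The following is a minimal sketch, not part of the official tutorial: the helper names `missing_env_vars` and `preflight_report` are hypothetical, and treating `DECK_OPENAI_API_KEY` as required and `KONNECT_PROXY_URL` as optional is an assumption of this example.

```python
import os

# Variables exported earlier in this tutorial. Which ones count as required
# versus optional is an assumption of this sketch.
REQUIRED_VARS = ["DECK_OPENAI_API_KEY"]
OPTIONAL_VARS = ["KONNECT_PROXY_URL"]


def missing_env_vars(names, env=None):
    """Return the names that are unset or empty in the given environment."""
    env = os.environ if env is None else env
    return [name for name in names if not env.get(name)]


def preflight_report(env=None):
    """Build a short, human-readable report about the tutorial's variables."""
    lines = []
    for name in missing_env_vars(REQUIRED_VARS, env):
        lines.append(f"missing required variable: {name}")
    for name in missing_env_vars(OPTIONAL_VARS, env):
        lines.append(f"optional variable not set: {name}")
    return lines or ["all expected variables are set"]


# Report on the current shell environment.
print("\n".join(preflight_report()))
```

If the report lists a missing variable, re-run the matching export command in the same terminal before continuing.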

Python

To complete this tutorial, you’ll need Python (version 3.7 or later) and pip installed on your machine. You can verify both by running:

python3 --version

python3 -m pip --version

- Create a virtual env:

python3 -m venv myenv

- Activate it:

source myenv/bin/activate

Configure the AI Proxy plugin

Enable the AI Proxy plugin with your OpenAI API key and model details to route Agno’s OpenAI-compatible requests through AI Gateway. In this example, we’ll use the gpt-4.1 model from OpenAI.

echo '
_format_version: "3.0"
plugins:
  - name: ai-proxy
    config:
      route_type: llm/v1/chat
      auth:
        header_name: Authorization
        header_value: Bearer ${{ env "DECK_OPENAI_API_KEY" }}
      model:
        provider: openai
        name: gpt-4.1
' | deck gateway apply -

Make sure that the AI Proxy plugin and the Agno script are configured to use the same OpenAI model.
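To check the plugin independently of Agno, you can send a plain OpenAI-style chat request to the Route. The sketch below uses only the Python standard library and keeps the model name in a single constant so it stays aligned with the plugin configuration. It assumes the Route from the prerequisites is reachable at `http://localhost:8000/anything`; the function names are hypothetical. No Authorization header is set because the plugin configuration above supplies one from `DECK_OPENAI_API_KEY`.

```python
import json
import urllib.request

# Assumptions of this sketch: the model name mirrors the plugin config, and
# the Route from the prerequisites is served by the local Data Plane.
MODEL = "gpt-4.1"
ROUTE_URL = "http://localhost:8000/anything"


def build_chat_request(prompt, model=MODEL, route_url=ROUTE_URL):
    """Build an OpenAI-style chat request aimed at the Gateway Route."""
    payload = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        route_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_chat_request(request, timeout=30):
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


# Building the request needs no network access, so it is safe to inspect first.
request = build_chat_request("Say hello in one word.")
print(request.get_method(), request.full_url)
```

Calling `send_chat_request(request)` performs the actual call through the Gateway. An HTTP error at that point usually means the plugin or the Route is not applied yet.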

Install required packages

Install the Python packages needed to run Agno’s research agent:

pip3 install -U agno openai duckduckgo-search newspaper4k lxml_html_clean ddgs

Create an Agno script for the research agent

Use the following command to create a file named research-agent.py containing an Agno Python script:

cat <<'EOF' > research-agent.py
import os
from textwrap import dedent

from agno.agent import Agent
from agno.models.openai import OpenAILike
from agno.tools.duckduckgo import DuckDuckGoTools
from agno.tools.newspaper4k import Newspaper4kTools

# Point the OpenAI-compatible client at the Kong Gateway Route instead of OpenAI.
model = OpenAILike(
    base_url="http://localhost:8000/anything",
    name="gpt-4.1",
    id="gpt-4.1",  # keep in sync with the model set in the AI Proxy plugin
    api_key=os.getenv("DECK_OPENAI_API_KEY"),
)

research_agent = Agent(
    model=model,
    # Web search plus article extraction, both capped to keep responses short.
    tools=[DuckDuckGoTools(fixed_max_results=2), Newspaper4kTools(article_length=500)],
    description=dedent("""\
        You are a historical analyst with deep expertise in ancient and medieval history.

        Your expertise includes:
        - Synthesizing academic research and primary sources
        - Analyzing military, economic, and political systems
        - Identifying root causes of societal collapse or transformation
        - Evaluating the role of leadership, ideology, and religion
        - Presenting competing historical perspectives
        - Providing clear, source-backed historical narratives
        - Explaining long-term implications and legacy
    """),
    instructions=dedent("""\
        1. Research Phase 📚
           - Locate academic analyses, historical summaries, and expert commentary
           - Identify internal and external factors contributing to the fall
           - Note military conflicts, economic instability, and political fragmentation

        2. Analysis Phase 🔍
           - Weigh the long-term structural issues versus short-term triggers
           - Consider geopolitical pressures, internal weaknesses, and cultural shifts
           - Highlight contributions of leadership decisions and external actors

        3. Reporting Phase 📝
           - Write a compelling executive summary and clear narrative
           - Structure by thematic causes (military, political, economic, religious)
           - Include quotes or viewpoints from notable historians
           - Present lessons learned or possible historical counterfactuals

        4. Review Phase ✔️
           - Validate all claims against reputable sources
           - Ensure neutrality and historical rigor
           - Provide a bibliography or references list
    """),
    expected_output=dedent("""\
        # The Fall of the Byzantine Empire: A Tapestry of Decline and Siege ⚔️

        ## Executive Summary
        {Short summary}

        ## Introduction
        {Short historical background}

        ## Causes of Decline
        {Two causes}

        ---
        Report by Historical Analysis AI
        Published: {current_date}
        Last Updated: {current_time}
    """),
    markdown=True,
)

if __name__ == "__main__":
    prompt = "What were the main causes of the fall of the Byzantine Empire?"
    print("The Agent Chronicler is compiling historical manuscripts ...\n")
    # Stream the answer to the terminal as it is generated.
    research_agent.print_response(
        prompt,
        stream=True,
    )
EOF


With the base_url parameter, we override the OpenAI base URL that Agno uses by default and point it at our Kong Gateway Route instead. This way, we can proxy requests and apply Kong Gateway plugins, while still using Agno integrations and tools.
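The following sketch illustrates how the base URL relates to the Route. It assumes the proxy address comes from the `KONNECT_PROXY_URL` variable exported by the quickstart (falling back to `http://localhost:8000`) and that the Route path is `/anything`. The helper names are hypothetical, and `chat_completions_url` only approximates what an OpenAI-compatible client does when it appends `/chat/completions` to its base URL.

```python
import os


def gateway_base_url(route_path="/anything", env=None):
    """Join the proxy address and the Route path into a client base URL."""
    env = os.environ if env is None else env
    # Assumption: fall back to the local Data Plane when the variable is unset.
    proxy_url = env.get("KONNECT_PROXY_URL") or "http://localhost:8000"
    return proxy_url.rstrip("/") + "/" + route_path.strip("/")


def chat_completions_url(base_url):
    """Approximate the URL an OpenAI-compatible client calls for chat requests."""
    return base_url.rstrip("/") + "/chat/completions"


# Use an empty environment here so the fallback address is shown.
base_url = gateway_base_url(env={})
print(base_url)                        # http://localhost:8000/anything
print(chat_completions_url(base_url))  # http://localhost:8000/anything/chat/completions
```

Because the Route matches on the `/anything` path prefix, the longer chat completions path should still reach the AI Proxy plugin.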

Validate

Run your script to validate that Agno agent can access the Route:

python3 research-agent.py

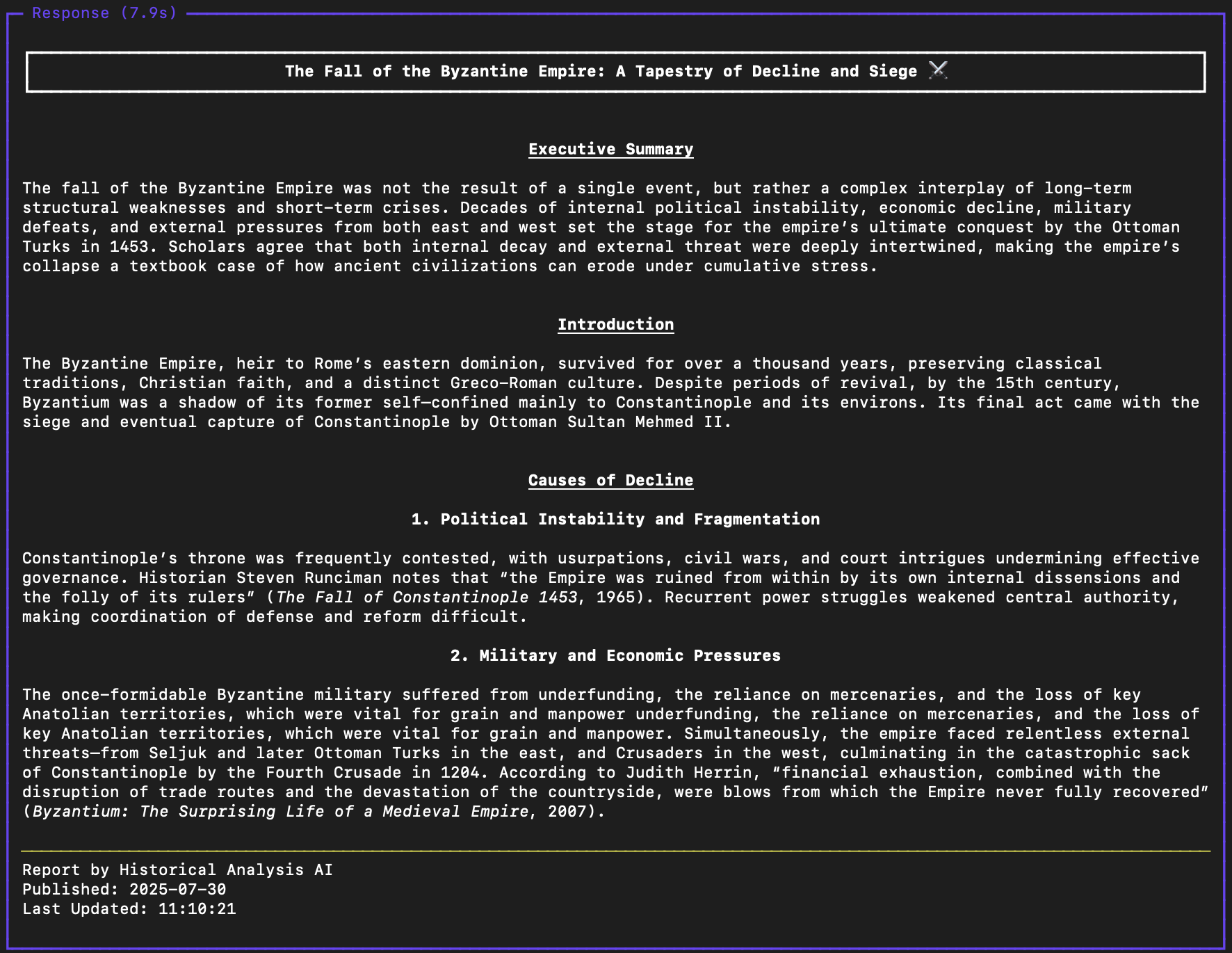

The response should look like this:

Cleanup

Clean up Konnect environment

If you created a new control plane and want to conserve your free trial credits or avoid unnecessary charges, delete the new control plane used in this tutorial.

Destroy the Kong Gateway container

curl -Ls https://get.konghq.com/quickstart | bash -s -- -d